In the pursuit of staying competitive, model releases continue at a rapid pace: GPT-5.2 marks OpenAI’s third significant model release since August. GPT-5 debuted that month featuring a novel routing system that alternates between instant-response and simulated reasoning modes, although users expressed dissatisfaction regarding responses that felt too detached and mechanical. The November GPT-5.1 update introduced eight preset “personality” selections while aiming to enhance the conversational quality of the system.

Statistics on the rise

Interestingly, although the GPT-5.2 model release appears to be a reaction to Gemini 3’s capabilities, OpenAI opted not to provide any comparative benchmarks on its promotional site between the two models. Instead, the official blog post emphasizes the advancements of GPT-5.2 over its earlier versions and its performance on OpenAI’s newly established GDPval benchmark, which seeks to evaluate professional knowledge work across 44 job categories.

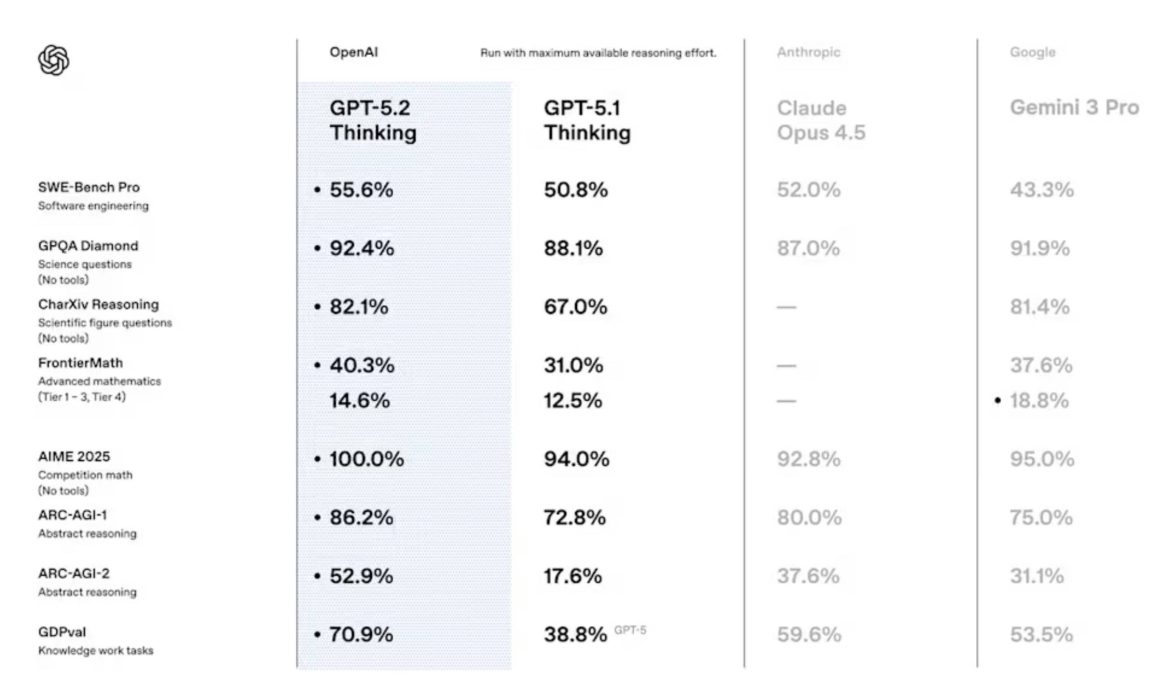

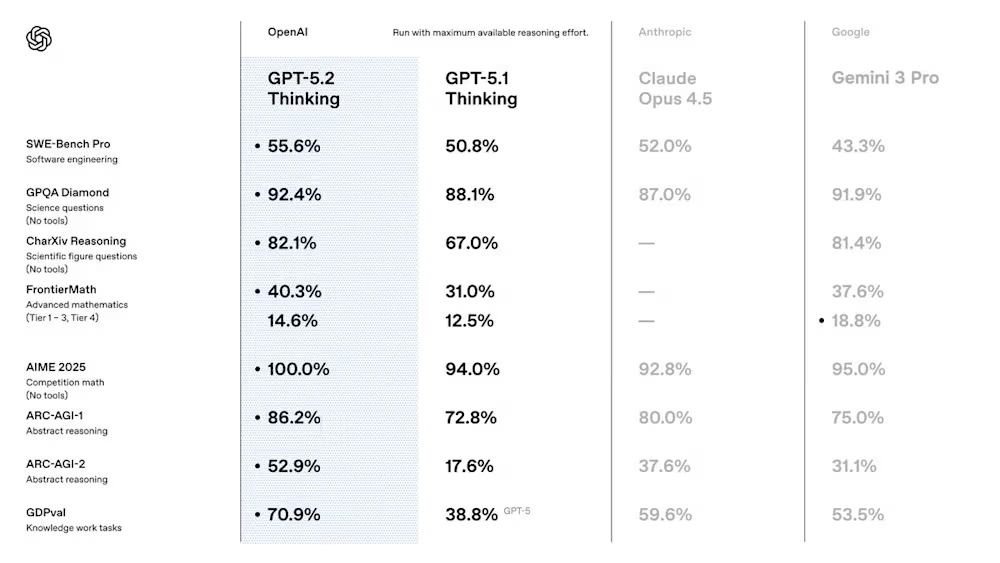

During the media briefing, OpenAI did present some comparative benchmarks that included Gemini 3 Pro and Claude Opus 4.5, but refuted the narrative suggesting that GPT-5.2 was hurried to the market due to Google. “It is crucial to note this has been in development for a prolonged period,” Simo informed reporters, although we should mention that the timing of its release is a deliberate strategy.

As per the provided statistics, GPT-5.2 Thinking achieved a score of 55.6 percent on SWE-Bench Pro, a software engineering standard, in contrast to 43.3 percent for Gemini 3 Pro and 52.0 percent for Claude Opus 4.5. On GPQA Diamond, a graduate-level science evaluation, GPT-5.2 obtained 92.4 percent, while Gemini 3 Pro scored 91.9 percent.

OpenAI asserts that GPT-5.2 Thinking outperforms or equals “human professionals” on 70.9 percent of tasks in the GDPval benchmark (in comparison to 53.3 percent for Gemini 3 Pro). The company also claims that the model executes these tasks more than 11 times faster and at less than 1 percent of the cost of human experts.

Reportedly, GPT-5.2 Thinking generates replies with 38 percent fewer inaccuracies than GPT-5.1, according to Max Schwarzer, OpenAI’s head of post-training, who declared to VentureBeat that the model “hallucinates considerably less” than its predecessor.

Nevertheless, we always approach benchmarks cautiously because it’s simple to present them favorably for a company, particularly when the methodology for objectively assessing AI performance has not completely aligned with corporate sales narratives for humanlike AI abilities.

Independent benchmarking results from researchers outside of OpenAI will require time to be published. For now, if you utilize ChatGPT for professional tasks, anticipate competent models with gradual enhancements and some improved coding capabilities included for good measure.