This context ceiling inherently restricts how much of a codebase an LLM can handle in a single pass, and supplying the model with many large code files (which the LLM must reprocess each time you send another response) can quickly eat through token or usage limits.

Practical techniques

To work around these constraints, creators of coding agents rely on several strategies. For instance, AI models are fine-tuned to generate code that delegates tasks to other software tools. They might emit Python scripts to pull data from images or files instead of sending the entire file through an LLM, saving tokens and reducing the chance of incorrect outputs.

Anthropic’s documentation notes that Claude Code also adopts this pattern to carry out complex data analysis across large databases, crafting focused queries and using Bash utilities like “head” and “tail” to inspect big volumes of data without ever loading the full data objects into context.
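To make the idea concrete, here is a minimal Python sketch of the kind of helper script an agent might write for itself. It is not taken from Claude Code or any other product; the function name `peek` and its parameters are hypothetical. It mimics what “head” and “tail” do by streaming a file line by line and keeping only the first and last few lines, so the model sees a small sample rather than the whole file.

```python
from collections import deque
from itertools import islice


def peek(path, n=5):
    """Return (first_lines, last_lines) of a text file without reading it all into memory.

    Hypothetical helper for illustration only: `peek` and `n` are assumed names,
    not part of any agent's real tooling.
    """
    with open(path, "r", encoding="utf-8", errors="replace") as f:
        # Take the first n lines, like `head -n`.
        head = list(islice(f, n))
        # Stream the rest of the file; the bounded deque keeps only the last n lines seen.
        # Because the head lines are already consumed, the two samples never overlap.
        tail = deque(f, maxlen=n)
    strip = lambda lines: [line.rstrip("\n") for line in lines]
    return strip(head), strip(tail)
```

Calling `peek("big_export.csv", 3)` on a file with millions of rows would return just six lines, which is all that needs to enter the model's context. For a file shorter than `n` lines, the second list simply comes back empty.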

(In some respects, these AI agents are directed yet semi-autonomous tool-using programs that substantially extend a concept we first encountered in early 2023.)

A further major advance in agents came from dynamic context management. Agents can do this in several ways, not all of which have been disclosed for proprietary coding models, but the primary technique they employ is context compression.

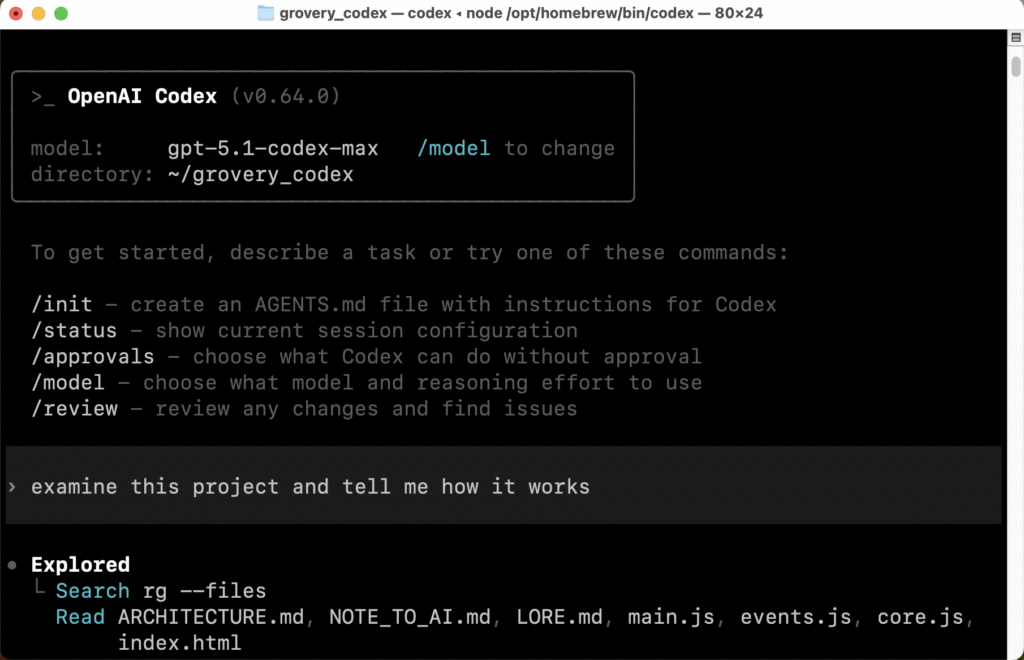

The command-line edition of OpenAI Codex operating in a macOS terminal window.