While speculation about an AI bubble swirls, along with fears that overinvestment could end in a sudden crash, something of a contradiction is playing out on the ground: Companies like Google and OpenAI can barely build infrastructure fast enough to meet their AI needs.

At a recent all-hands meeting, Google’s head of AI infrastructure, Amin Vahdat, told employees that the company must double its serving capacity every six months to keep pace with demand for artificial intelligence services, CNBC reports. Vahdat, a vice president at Google Cloud, presented slides showing that the company needs to scale “the next 1000x in 4-5 years.”
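The two figures can be checked against each other with simple compounding arithmetic. The following is a minimal sketch, assuming perfectly smooth exponential growth (an idealization, not something stated in the presentation); the function names are illustrative only.

```python
import math

def growth_factor(years, doubling_months=6.0):
    """Total capacity multiple after `years` if capacity doubles every `doubling_months`."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings

def doubling_months_needed(target_factor, years):
    """Doubling period (in months) required to reach `target_factor` in `years`."""
    return years * 12.0 / math.log2(target_factor)

for years in (4, 5):
    factor = growth_factor(years)
    needed = doubling_months_needed(1000, years)
    print(f"{years} years at 6-month doubling: {factor:.0f}x; "
          f"1000x would need doubling every {needed:.2f} months")
```

Under that assumption, doubling every six months compounds to 256x after four years and 1,024x after five, so the six-month doubling rate lines up with the five-year end of the “4-5 years” range. Reaching 1000x in four years would require doubling roughly every 4.8 months.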

While a thousandfold increase in compute capacity sounds ambitious on its own, Vahdat noted several key constraints: Google must deliver this increase in capability, compute, and storage networking “for essentially the same cost and increasingly, the same power, the same energy level,” he told employees during the meeting. “It won’t be easy but through teamwork and co-design, we’re going to achieve it.”

It is unclear how much of the “demand” Google cites reflects organic user interest in AI capabilities and how much results from the company integrating AI features into existing services such as Search, Gmail, and Workspace. Whether or not users adopt those features voluntarily, Google is not the only tech company struggling to keep up with a growing base of customers using AI services.

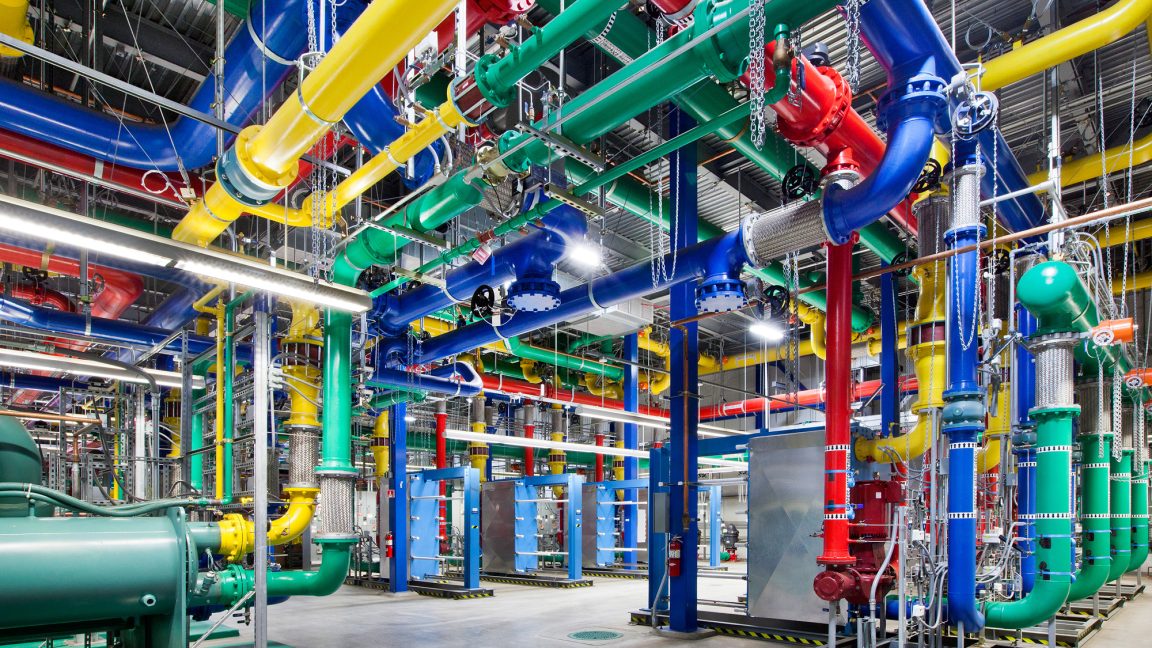

Major tech companies are racing to build data centers. Google rival OpenAI plans to build six massive data centers across the US through its Stargate partnership with SoftBank and Oracle, committing over $400 billion in the next three years to reach nearly 7 gigawatts of capacity. The company faces similar constraints in serving its 800 million weekly ChatGPT users, with even paid subscribers regularly hitting usage limits for features like video synthesis and simulated reasoning models.

“The competition in AI infrastructure is the most crucial and also the most costly aspect of the AI race,” Vahdat said at the meeting, according to CNBC’s account of the presentation. The infrastructure executive explained that Google’s challenge goes beyond simply outspending competitors. “We’re going to invest significantly,” he said, but he noted that the real objective is to build infrastructure that is “more reliable, more performant, and more scalable than what’s accessible anywhere else.”