AI and quantum technologies are significantly altering the landscape of cybersecurity, changing both the speed and the scale of operations for digital defenders and their adversaries.

The deployment of AI tools for cyberattacks is already demonstrating itself as a formidable challenge to existing defenses. From reconnaissance to ransomware, threat actors can leverage AI to automate their assaults more quickly than ever. This encompasses using generative AI to orchestrate social engineering schemes on a massive scale, producing tens of thousands of tailored phishing emails in mere seconds, or utilizing readily accessible voice cloning software to bypass security measures for a fraction of the cost. Furthermore, agentic AI escalates the scenario by enabling autonomous systems that can reason, respond, and adapt like human opponents.

However, AI is not the sole driver shaping the threat environment. Quantum computing has the potential to severely compromise current encryption standards if it continues to develop unregulated. Quantum algorithms have the capability to resolve the mathematical challenges that underpin most contemporary cryptographic systems, especially public-key systems like RSA and Elliptic Curve, which are commonly employed for secure online communication, digital signatures, and cryptocurrency.

“We understand that quantum technology is on the horizon. When it arrives, it will necessitate a transformation in how we secure data throughout all sectors, including governments, telecommunications, and financial institutions,” states Peter Bailey, senior vice president and general manager of Cisco’s security division.

“Many organizations are rightly concentrating on the urgent nature of AI threats,” Bailey notes. “Quantum may seem like a distant concept, but those possibilities are approaching quicker than most realize. It’s imperative to start investing now in protections that can endure both AI and quantum threats.”

Central to this defensive stance is a zero trust model for cybersecurity, which operates on the principle that no user or device can be assumed to be inherently trustworthy. By enforcing ongoing verification, zero trust facilitates continuous monitoring and guarantees that any attempts to exploit weaknesses are swiftly detected and managed in real time. This methodology is technology-agnostic and establishes a robust framework capable of withstanding a perpetually evolving threat landscape.

Establishing AI defenses

AI is reducing the entry barriers for cyberattacks, allowing hackers, even those with minimal skills or resources, to breach, manipulate, and exploit even the smallest digital weaknesses.

Close to three-quarters (74%) of cybersecurity professionals indicate that AI-enabled threats are currently having a noticeable effect on their organizations, with 90% expecting such threats within the next one to two years.

“AI-driven adversaries utilize sophisticated techniques and function at machine speeds,” says Bailey. “The only way to keep up is to leverage AI for automated responses and to defend at machine speeds.”

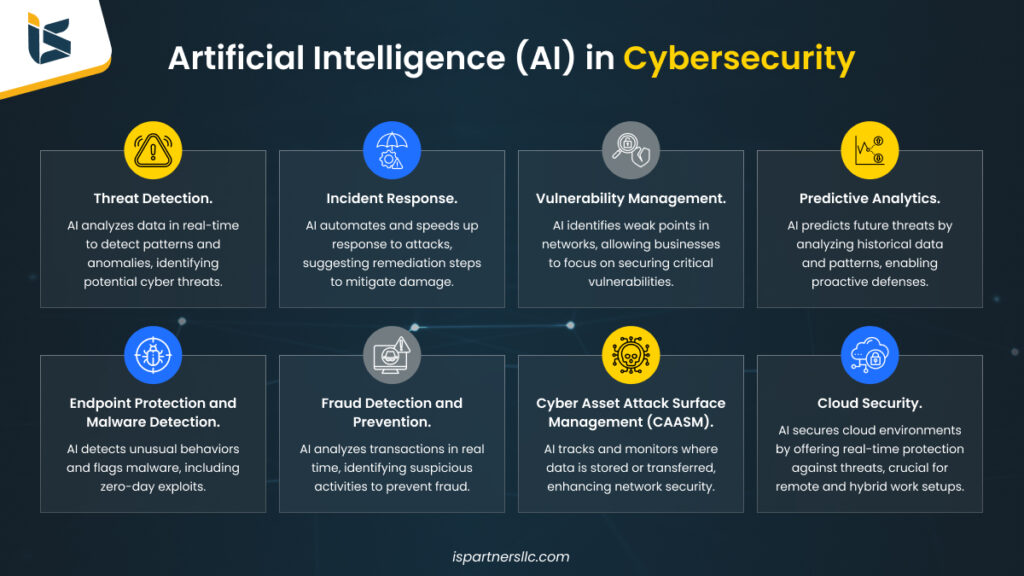

To accomplish this, Bailey suggests that organizations must upgrade their systems, platforms, and security operations to automate threat detection and response—processes that previously depended on human rule creation and reaction times. These systems should have the capability to adapt dynamically as environments change and criminal strategies evolve.

Simultaneously, businesses must enhance the security of their AI models and data to minimize exposure to manipulation from AI-driven malware. Such threats could encompass, for example, prompt injections, where a malicious actor crafts a prompt that coercively directs an AI model into executing unintended actions, sidestepping its original guidelines and safeguards.

Agentic AI intensifies the challenge, enabling hackers to employ AI agents to automate assaults and make strategic decisions without constant human oversight. “Agentic AI could drastically reduce the cost of executing attacks,” Bailey observes. “This implies that common cybercriminals could initiate campaigns that, until now, were feasible only for well-funded espionage operations.”

Organizations, in turn, are investigating how AI agents can assist them in maintaining an edge. Almost 40% of firms expect agentic AI to supplement or support teams within the next year, particularly in cybersecurity, as stated in Cisco’s 2025 AI Readiness Index. Applications involve AI agents trained on telemetry that can pinpoint anomalies or signals from machine data that are too varied and unstructured for human interpretation.

Evaluating the quantum threat

While many cybersecurity groups focus on the very real AI-driven threats, quantum technology lingers on the periphery. Nearly three-quarters (73%) of US organizations surveyed by KPMG believe it’s only a matter of time before cybercriminals utilize quantum computing to decrypt and undermine today’s cybersecurity measures. Yet, a significant majority (81%) also concede they could improve their measures to secure their data.

Businesses are justified in their concerns. Threat actors are already conducting harvest now, decrypt later cyberattacks, hoarding sensitive encrypted information to decode once quantum technologies mature. Instances include state-sponsored groups intercepting government communications and networks of cybercriminals retaining encrypted online traffic or financial information.

Major tech companies are among the first to implement quantum defenses. For instance, Apple utilizes cryptography protocol PQ3 to protect against harvest now, decrypt later assaults on its iMessage system. Google is experimenting with post-quantum cryptography (PQC)—which is resilient against attacks from both quantum and classical computing—in its Chrome browser. Furthermore, Cisco “has made significant investments in securing our software and infrastructure against quantum threats,” remarks Bailey. “We can expect more enterprises and governments to adopt similar strategies in the coming 18 to 24 months,” he adds.

As regulations such as the US Quantum Computing Cybersecurity Preparedness Act outline requirements for counteracting quantum threats, including standardized PQC algorithms mandated by the National Institute of Standards and Technology, a broader array of organizations will begin preparing their quantum defenses.

For organizations embarking on this journey, Bailey recommends two critical actions. First, ensure visibility. “Be aware of what data you possess and where it’s stored,” he advises. “Inventory your data, evaluate sensitivity, and audit your encryption keys, replacing any that are vulnerable or outdated.”

Secondly, plan for migration. “Next, assess what will be necessary to support post-quantum algorithms across your infrastructure. This involves addressing not just the technology but also the implications for processes and personnel,” Bailey indicates.

Embracing proactive defense

Ultimately, the groundwork for building resilience against both AI and quantum threats rests on adopting a zero trust framework, according to Bailey. By embedding zero trust access protocols across users, devices, business applications, networks, and clouds, this strategy permits only the essential access necessary to perform tasks and allows for ongoing monitoring. It can also minimize the attack surface by confining potential threats to isolated zones, preventing them from infiltrating other critical systems.

Into this zero trust architecture, organizations can incorporate specific measures to guard against AI and quantum risks. For example, employing quantum-proof cryptography and AI-driven analytical and security tools can help identify intricate attack patterns and automate real-time responses.

“Zero trust mitigates attack vectors and bolsters resilience,” says Bailey. “It ensures that even if a breach happens, the most critical assets remain safeguarded and business operations can recover swiftly.”

Ultimately, organizations should not await the emergence and evolution of threats. They must proactively position themselves. “This isn’t a hypothetical scenario; it’s an inevitable occurrence,” Bailey asserts. “Entities that invest early will be the frontrunners, not those scrambling to catch up.”

This content was produced by Insights, the custom content division of MIT Technology Review. It was not authored by the editorial team of MIT Technology Review. It was developed, designed, and written by human writers, editors, analysts, and illustrators. This encompasses the drafting of surveys and the collection of data for surveys. AI tools that may have been utilized were restricted to secondary production processes that underwent thorough human oversight.