Zoe Kleinman, Technology editor, BBC

Mark Zuckerberg is reported to have begun construction on Koolau Ranch, his vast 1,400-acre estate on the Hawaiian island of Kauai, as early as 2014.

It is expected to include a shelter with its own energy and food supplies, although carpenters and electricians working on the project were barred from discussing it by non-disclosure agreements, according to a report in Wired magazine. A six-foot barrier blocked the project from view from a nearby road.

When asked last year if he was constructing a doomsday bunker, the Facebook co-founder simply replied “no.” He clarified that the underground space, measuring approximately 5,000 square feet, is “just like a little shelter, it’s like a basement.”

That has not quelled the rumors. Nor has his purchase of 11 properties in the Crescent Park neighborhood of Palo Alto, California, which reportedly include a 7,000-square-foot underground space beneath them.

While his construction permits refer to basements, some neighbors have dubbed it a bunker. Or even a billionaire’s bat cave.

Speculation also surrounds other tech billionaires, some of whom appear to be buying up parcels of land with underground spaces that could be converted into luxury bunkers.

Reid Hoffman, co-founder of LinkedIn, has referred to this as “apocalypse insurance.” He has previously stated that around half of the ultra-wealthy possess such plans, with New Zealand being a favored locale for retreats.

So, are they genuinely preparing for warfare, the ramifications of climate change, or another catastrophic scenario that remains unknown to the rest of society?

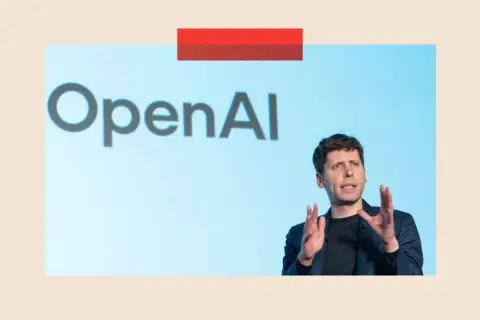

In recent years, the rapid advancement of artificial intelligence (AI) has intensified concerns about potential existential threats. Many are increasingly anxious about the pace of development.

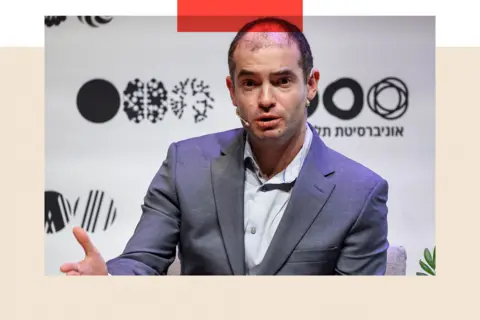

Ilya Sutskever, a co-founder of the tech company OpenAI and its chief scientist at the time, is reportedly among this group.

By mid-2023, the San Francisco-based company had released ChatGPT, a chatbot now used by hundreds of millions of people worldwide, and was working quickly on updates.

However, by that summer, Mr. Sutskever was increasingly convinced that computer scientists were on the verge of creating artificial general intelligence (AGI)—the stage where machines rival human intellect—according to journalist Karen Hao’s book.

In a meeting, Mr. Sutskever reportedly suggested that colleagues should dig an underground shelter for the company’s leading scientists before such a powerful technology was released to the world, Ms. Hao writes.

“We’re certainly going to construct a bunker before we launch AGI,” he is widely reported to have said, though it remains unclear who he meant by “we.”

This reveals a curious truth: many prominent computer scientists striving to create an exceptionally intelligent AI seem profoundly fearful of its future implications.

So, when exactly—if at all—will AGI emerge? And could it truly be transformative enough to instill fear in ordinary individuals?

An arrival ‘sooner than we expect’

Tech moguls assert that AGI is on the horizon. OpenAI’s Sam Altman stated in December 2024 that it will arrive “sooner than most people anticipate in the world.”

Sir Demis Hassabis, co-founder of DeepMind, has speculated it could come within the next five to ten years, while Anthropic’s Dario Amodei noted last year that what he prefers to call “powerful AI” might emerge as early as 2026.

Others, however, are skeptical. “They constantly change the expectations,” remarks Dame Wendy Hall, professor of computer science at Southampton University. “It varies depending on who you consult.” Although we’re on the phone, I can almost sense her eye-roll.

“The scientific community acknowledges that AI technology is astounding,” she continues, “but it is far from achieving human intelligence.”

Multiple “fundamental breakthroughs” would be necessary first, agrees Babak Hodjat, the chief technology officer of Cognizant.

Moreover, AGI is unlikely to arrive as a single moment. AI is a rapidly evolving field, and numerous companies across the globe are competing to develop their own versions of it.

However, one reason this concept excites some within Silicon Valley is its perceived status as a precursor to an even more advanced state: ASI, or artificial superintelligence—technology that exceeds human cognitive abilities.

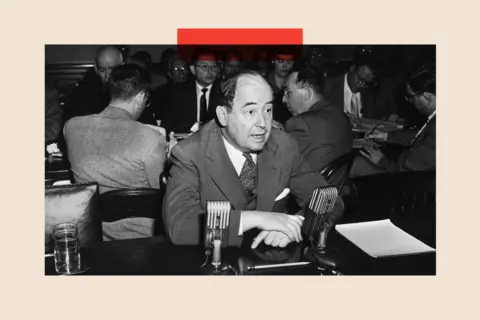

The idea of “the singularity” was posthumously attributed to Hungarian-born mathematician John von Neumann in 1958. It signifies the instant when computer intelligence surpasses human comprehension.

More recently, the 2024 book Genesis, by Eric Schmidt, Craig Mundie, and the late Henry Kissinger, explores the notion of a super-powerful technology that becomes so adept at decision-making and leadership that we end up handing control over to it completely.

They argue it is a matter of when, not if.

Money for all, without needing a job?

Proponents of AGI and ASI are almost zealous about their potential benefits. They argue these technologies will discover new treatments for deadly illnesses, tackle climate change, and create an inexhaustible source of clean energy.

Elon Musk has suggested that super-intelligent AI could usher in an age of “universal high income.”

He recently endorsed the idea that AI will become so cheap and widespread that nearly everyone will want their own “personal R2-D2 and C-3PO” (referring to the droids from Star Wars).

“Everyone will receive the best healthcare, food, home, transportation, and everything else. Sustainable abundance,” he enthused.

There is a frightening side, however. Could this technology be hijacked by extremists and turned into a massive weapon? Or could it decide for itself that humanity is the root cause of the world’s problems and destroy us?

“If it outsmarts you, then we must keep it under control,” cautioned Tim Berners-Lee, the creator of the World Wide Web, during a discussion with the BBC recently.

“We have to ensure we can deactivate it.”

Governments are taking some protective steps. In the U.S., where many leading AI companies are based, President Biden signed an executive order in 2023 requiring some companies to share safety test results with the federal government. However, President Trump has since revoked parts of the order, calling it a “blockage” to innovation.

Meanwhile, in the UK, the AI Safety Institute—a research organization funded by the government—was established two years ago to gain a better grasp of the risks posed by advanced AI.

Additionally, there are several ultra-wealthy individuals with their own disaster preparedness plans.

“Claiming you’re ‘buying a house in New Zealand’ is somewhat of a wink, wink, say no more,” Reid Hoffman previously noted. The same could likely be said of bunkers.

However, a distinctly human flaw arises.

I once met a former bodyguard of a billionaire with his own “bunker,” who told me that if disaster really did strike, his security team’s first priority would be to eliminate their boss and take the bunker for themselves. He did not seem to be joking.

Is it all alarmist nonsense?

Neil Lawrence, a professor of machine learning at Cambridge University, believes this entire discussion is nonsensical.

“The idea of Artificial General Intelligence is as ridiculous as referring to an ‘Artificial General Vehicle’,” he states.

“The appropriate vehicle is contingent on context. I used an Airbus A350 to fly to Kenya, I use a car to reach my university each day, and I walk to the cafeteria… There isn’t a single vehicle capable of handling all of this.”

For him, discussions surrounding AGI are a diversion.

“The technology we now possess [already] enables, for the first time, ordinary people to converse with a machine and potentially command it to do their bidding. That is absolutely remarkable… and fundamentally transformative.

“The significant concern is that we are so engrossed in the tech industry’s narratives regarding AGI that we overlook the areas where improvements are essential for people.”

Current AI tools are trained on vast datasets and excel at recognizing patterns, whether that means spotting signs of tumors in scans or predicting the next word in a sequence. Yet they do not possess “feelings,” regardless of how convincing their responses may seem.

“There are some ‘cheaty’ methods to make a Large Language Model (the foundation of AI chatbots) behave as if it has memory and learns, but these solutions are unsatisfactory and significantly inferior to humans,” remarks Mr. Hodjat.

Vince Lynch, CEO of the California-based IV.AI, is also skeptical of exaggerated claims about AGI.

“It’s superb marketing,” he argues. “If you’re the company developing the smartest creation ever, people will be inclined to direct their funds toward you.”

He adds, “It’s not a two-year matter. It calls for immense computing power, significant human ingenuity, and numerous trials and errors.”

Asked whether he believes AGI will ever materialize, he pauses for a long time.

“I truly don’t know.”

Intelligence without consciousness

In certain aspects, AI has already surpassed human minds. A generative AI tool can excel in medieval history one moment and tackle intricate mathematical problems the next.

Some technology firms claim they do not always comprehend why their products behave as they do. Meta reports there are indications of its AI systems evolving independently.

In the end, no matter how intelligent machines may become, biologically the human brain still prevails.

The human brain contains approximately 86 billion neurons and 600 trillion synapses, far more than its artificial counterparts. It also doesn’t pause between interactions, and it continually adapts to new information.

“When you inform a human that life has been discovered on an exoplanet, they will immediately assimilate that knowledge, and it will shape their perspective moving forward. For a Large Language Model (LLM), they will only retain that fact as long as you continually present it to them,” states Mr. Hodjat.

“LLMs also lack meta-cognition, meaning they do not fully understand what they know. Humans possess an introspective capability, sometimes referred to as consciousness, that enables them to comprehend their knowledge.”

This element is a crucial facet of human intelligence—one that has yet to be replicated in a laboratory.

